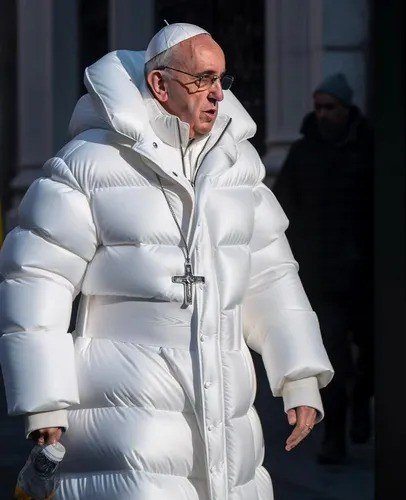

Over the past 18 months or so, we seem to have lost the ability to trust our eyes. Photoshop fakes are nothing new, of course, but the advent of generative artificial intelligence (AI) has taken fakery to a whole new level. Perhaps the first viral AI fake was the 2023 image of the Pope in a white designer puffer jacket, but since then the number of high-quality eye deceivers has skyrocketed into the many thousands. And as AI develops further, we can expect more and more convincing fake videos in the very near future.

One of the first deepfakes to go viral worldwide: the Pope sporting a trendy white puffer jacket

This will only exacerbate the already knotty problem of fake news and accompanying images. These might show a photo from one event and claim it’s from another, put people who’ve never met in the same picture, and so on.

Image and video spoofing has a direct bearing on cybersecurity. Scammers have been using fake images and videos to trick victims into parting with their cash for years. They might send you a picture of a sad puppy they claim needs help, an image of a celebrity promoting some shady scheme, or even a picture of a credit card they say belongs to someone you know. Fraudsters also use AI-generated images in profiles for catfishing on dating sites and social media.

The most sophisticated scams make use of deepfake video and audio of the victim’s boss or a relative to get them to do the scammers’ bidding. Just recently, an employee of a financial institution was duped into transferring $25 million to cybercrooks! They’d set up a video call with the “CFO” and “colleagues” of the victim, all of them deepfakes.

So what can be done to deal with deepfakes or just plain fakes? How can they be detected? This is an extremely complex problem, but one that can be mitigated step by step by tracing the provenance of the image.

Wait… haven’t I seen that before?

As mentioned above, there are different kinds of “fakeness”. Sometimes the image itself isn’t fake, but it’s used in a misleading way. Maybe a real photo from a warzone is passed off as being from another conflict, or a scene from a movie is presented as documentary footage. In these cases, looking for anomalies in the image itself won’t help much, but you can try searching for copies of the picture online. Luckily, we’ve got tools like Google Reverse Image Search and TinEye, which can help us do just that.

If you have any doubts about an image, just upload it to one of these tools and see what comes up. You might find that the same picture of a family made homeless by fire, or a group of shelter dogs, or victims of some other tragedy has been making the rounds online for years. Incidentally, when it comes to false fundraising, there are a few other red flags to watch out for besides the images themselves.

Dogs from a shelter? No, from a stock photo site

Photoshopped? We’ll soon know.

Since photoshopping has been around for a while, mathematicians, engineers, and image experts have long been working on ways to detect altered images automatically. Some popular methods include image metadata analysis and error level analysis (ELA), which checks for JPEG compression artifacts to identify modified portions of an image. Many popular image analysis tools, such as Fake Image Detector, apply these techniques.

Fake Image Detector warns that the Pope probably didn’t wear this on Easter Sunday… Or ever

With the emergence of generative AI, we’ve also seen new AI-based methods for detecting generated content, but none of them are perfect. Here are some of the relevant developments: detection of face morphing; detection of AI-generated images and identification of the AI model used to generate them; and an open AI model for the same purposes.

With all these approaches, the key problem is that none gives you 100% certainty about the provenance of the image, guarantees that the image is free of modifications, or makes it possible to verify any such modifications.

WWW to the rescue: verifying content provenance

Wouldn’t it be great if there were an easier way for regular users to check if an image is the real deal? Imagine clicking on a picture and seeing something like: “John took this photo with an iPhone on March 20”, “Ann cropped the edges and increased the brightness on March 22”, “Peter re-saved this image with high compression on March 23”, or “No changes were made”, with all such data impossible to fake. Sounds like a dream, right? Well, that’s exactly what the Coalition for Content Provenance and Authenticity (C2PA) is aiming for. C2PA includes some major players from the computer, photography, and media industries: Canon, Nikon, Sony, Adobe, AWS, Microsoft, Google, Intel, BBC, Associated Press, and about a hundred other members. That covers basically all the companies that could be individually involved in pretty much any step of an image’s life, from creation to publication online.

The C2PA standard developed by this coalition is already out there and has even reached version 1.3, and now we’re starting to see the pieces of the industrial puzzle necessary to use it fall into place. Nikon is planning to make C2PA-compatible cameras, and the BBC has already published its first articles with verified images.

BBC talks about how images and videos in its articles are verified

The idea is that when responsible media outlets and big companies switch to publishing images in verified form, you’ll be able to check the provenance of any image directly in the browser. You’ll see a little “verified image” label, and when you click on it, a bigger window will pop up showing you what images served as the source, what edits were made at each stage before the image appeared in the browser, and by whom and when. You’ll even be able to see all the intermediate versions of the image.

History of image creation and editing

This approach isn’t just for cameras; it can work for other ways of creating images too. Services like Dall-E and Midjourney can also label their creations.

This was clearly created in Adobe Photoshop

The verification process is based on public-key cryptography similar to the protection used in web server certificates for establishing a secure HTTPS connection. The idea is that every image creator, be it Joe Bloggs with a particular type of camera or Angela Smith with a Photoshop license, will need to obtain an X.509 certificate from a trusted certificate authority. This certificate can be hardwired directly into the camera at the factory, while for software products it can be issued upon activation. When processing images with provenance tracking, each new version of the file will contain a large amount of extra information: the date, time, and location of the edits, thumbnails of the original and edited versions, and so on. All this will be digitally signed by the author or editor of the image. This way, a verified image file will have a chain of all its previous versions, each signed by the person who edited it.
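To make the idea of a signed chain of versions more concrete, here is a minimal Python sketch. It is not the C2PA format or API: the field names, the `KEYS` table, and the `add_entry` and `verify` helpers are all hypothetical, and HMAC with per-editor secrets stands in for the X.509 certificate signatures described above, purely so that the example runs with the standard library alone.

```python
import hashlib
import hmac
import json

# Hypothetical per-editor secrets. Real C2PA relies on X.509 certificates and
# asymmetric signatures; HMAC is only a standard-library stand-in here.
KEYS = {"john": b"john-secret", "ann": b"ann-secret"}


def _digest(record):
    """Hash a record deterministically (keys are sorted before hashing)."""
    payload = json.dumps(record, sort_keys=True).encode()
    return hashlib.sha256(payload).hexdigest()


def _sign(editor, body):
    """Sign the digest of an entry body with the editor's key."""
    return hmac.new(KEYS[editor], _digest(body).encode(), hashlib.sha256).hexdigest()


def add_entry(chain, editor, action, image_bytes):
    """Return a new chain with a signed entry linked to the previous one."""
    body = {
        "editor": editor,
        "action": action,
        "image_sha256": hashlib.sha256(image_bytes).hexdigest(),
        "prev": _digest(chain[-1]) if chain else None,
    }
    return chain + [{**body, "signature": _sign(editor, body)}]


def verify(chain, image_bytes):
    """Check every link and signature, then match the final image hash."""
    prev = None
    for entry in chain:
        body = {k: v for k, v in entry.items() if k != "signature"}
        if entry["editor"] not in KEYS or body["prev"] != prev:
            return False
        if not hmac.compare_digest(_sign(entry["editor"], body), entry["signature"]):
            return False
        prev = _digest(entry)
    current = hashlib.sha256(image_bytes).hexdigest()
    return bool(chain) and chain[-1]["image_sha256"] == current


chain = add_entry([], "john", "captured with camera", b"raw pixels")
chain = add_entry(chain, "ann", "cropped and brightened", b"edited pixels")
print(verify(chain, b"edited pixels"))
```

Because each entry stores a hash of the previous entry, changing any earlier record (or swapping the final image) makes verification fail. A real implementation would also validate each signer's certificate against a trusted certificate authority, which this sketch omits.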

This video contains AI-generated content

The authors of the specification were also concerned with privacy features. Sometimes, journalists can’t reveal their sources. For situations like that, there’s a special type of edit called “redaction”. This allows someone to replace some of the information about the image creator with zeros and then sign that change with their own certificate.

To showcase the capabilities of C2PA, a collection of test images and videos was created. You can check out the Content Credentials website to see the credentials, creation history, and editing history of these images.

The Content Credentials website reveals the full background of C2PA images

Natural limitations

Unfortunately, digital signatures for images won’t solve the fakes problem overnight. After all, there are already billions of images online that haven’t been signed by anyone and aren’t going anywhere. However, as more and more reputable information sources switch to publishing only signed images, any photo without a digital signature will start to be viewed with suspicion. Real photos and videos with timestamps and location data will be almost impossible to pass off as something else, and AI-generated content will be easier to spot.